Containers have rapidly replaced virtual machines in cloud deployments, while Kubernetes serves to automate and manage container deployment. Kubernetes is widely utilized by IT providers who offer cloud computing or containerization solutions to businesses.

These providers specialize in working with their clients to meet Kubernetes requirements for niche markets and services. Companies that embark on a container journey often need to hire specialists before their own teams have learned the basics, and engaging a Kubernetes service provider can help in many other ways as well.

Kubernetes (K8s) has complex configurations, and many organizations lack the in-house skills to troubleshoot Kubernetes clusters on-premises or in the cloud. This has proven challenging for the Kubernetes consultants and operations teams who must now adapt. Although K8s adoption continues to rise rapidly, many developers and operations teams still need to learn its tools, workflows, and terminology before adopting the technology fully.

What is Kubernetes?

Kubernetes was initially developed based on 15 years of production workload experience at Google before being open-sourced for broader use in 2014. Since then, its ecosystem of Kubernetes deployment best practices has grown substantially to support some of the largest software services worldwide.

Containers provide a lightweight way of virtualizing infrastructure optimized for cloud-native apps. Users can split machines up into separate instances of OS or applications while still maintaining isolation among workloads; this enables greater resource utilization and faster start-up times.

What is so scary about Kubernetes?

Kubernetes changed the way that organizations use containers. It still comes with its challenges, which many are still attempting to overcome. Canonical’s “Kubernetes & cloud-native operations report, 2021” contains many contributions from leading industry organizations, such as Google, Amazon, and CNCF.

Although many organizations use Kubernetes or plan to implement it in the future, the majority (54.5%) report that the lack of internal skills and limited resources are the biggest challenges they face. Other reported challenges include:

- Difficulty training users

- Security and compliance issues

- Monitoring and observability

- Cost overruns

- The speed of Kubernetes’ evolution

- Inefficient day-to-day operations

Gartner’s recent survey reveals similar results, stating that the most significant challenges for CIOs post-Covid include managing technical debt and filling skill gaps. Many solutions have been developed to simplify the transition to the cloud-native era for organizations.

Can Cloud Computing Deliver?

Kubernetes’ design has ensured that all is not lost. Kubernetes’ exponential growth fuels innovation and replaces legacy management practices. Kubernetes uses Everything-as-Code to define the state of resources, ranging from simple compute nodes to TLS certificates. It relies on three main design constructs:

- Standard interfaces that reduce friction when integrating internal and external components

- APIs as the only way to perform CRUD operations (Create, Read, Update, Delete)

- YAML to define the desired state of these components in a simple, readable manner

These three constructs are essential if you want to make it easier for cross-functional teams to adopt an automation platform. Together they blur the lines between departments and allow for better collaboration among teams.
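
As a rough illustration of the declarative, API-driven model described above, the sketch below expresses a desired state as plain data and works out which create, update, or delete calls would bring the current state in line with it. The resource names and the `plan_actions` helper are hypothetical; real Kubernetes controllers are considerably more involved.

```python
# Hypothetical sketch: desired state held as data, reconciled through CRUD-style actions.

def plan_actions(desired: dict, actual: dict) -> list[tuple[str, str]]:
    """Return the (action, resource_name) pairs needed to turn `actual` into `desired`."""
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Illustrative resource names only; not taken from any real cluster.
desired_state = {
    "web-deployment": {"replicas": 3, "image": "example/web:2.0"},
    "web-tls-cert": {"dns_name": "web.example.com"},
}
actual_state = {
    "web-deployment": {"replicas": 2, "image": "example/web:2.0"},
    "old-job": {"image": "example/job:1.0"},
}

for action, name in plan_actions(desired_state, actual_state):
    print(f"{action}: {name}")
```

The point of the sketch is that teams only edit the desired state; the actions are derived from it rather than scripted by hand.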

Customers and partners who adopt Kubernetes can reach a state of hyper-automation. Kubernetes encourages teams to embrace multiple DevOps practices and foundations, such as Everything-as-Code (EaC), Git version control, peer reviews, and documentation as code, while also encouraging cross-functional collaboration. These practices mature a team’s automation skills and give it a head start on GitOps CI/CD pipelines that handle both application lifecycle and infrastructure.

Kubernetes Challenges and Solutions

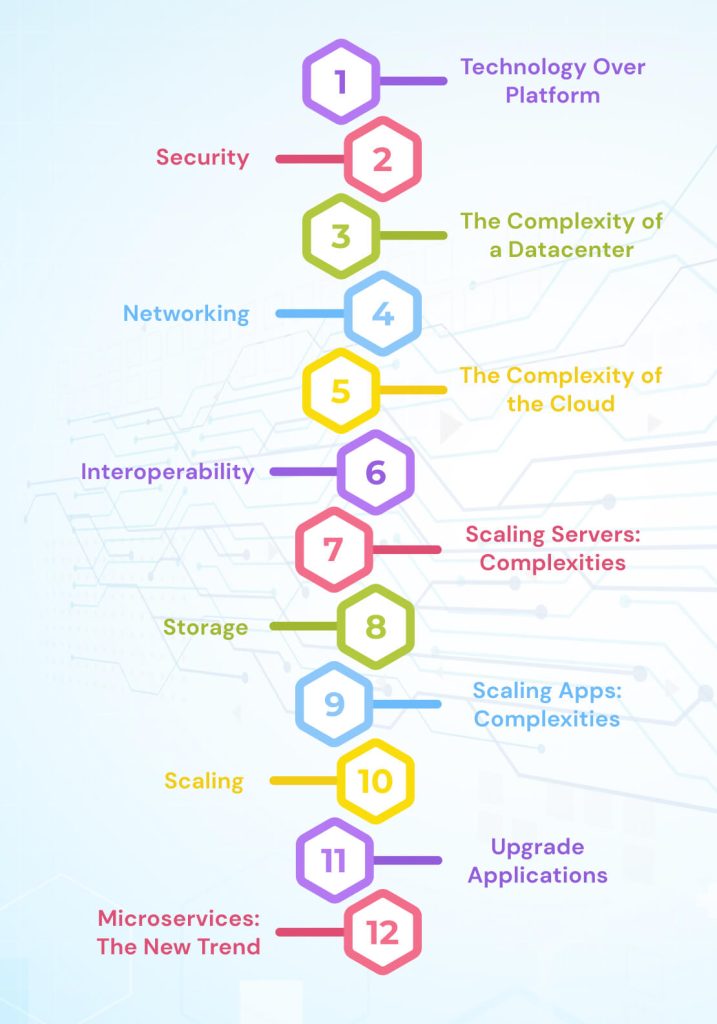

It is essential to manage the risks that come with Kubernetes. Here are some of the most common Kubernetes challenges and how Kubernetes consultants can help overcome them.

Technology Over Platform

Kubernetes is itself a platform.

It is a very large platform. It has been called:

- The data centre of the cloud

- The operating system of the cloud

There is a good reason for these labels: a lot is going on under the surface of Kubernetes. Still, it is worth remembering why Kubernetes exists in the first place.

Kubernetes manages and scales Pods, which are groups of one or more containers. Containers run applications. Applications can be scripts, front-end apps, or back-end apps.

Kubernetes orchestrates Pods for us. It:

- Schedules Pods so that they run on suitable nodes.

- Scales Pods up or down automatically.

- Runs the same application on multiple Pods using replicas.

- Exposes Pods as Services so that users and other Pods within the Kubernetes cluster can access them.
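
The orchestration tasks listed above are usually requested through manifests. The sketch below builds a Deployment with three replicas and a Service that exposes them, then prints both as JSON, which Kubernetes accepts alongside YAML. The names, labels, image, and ports are placeholders chosen for illustration.

```python
import json

APP_LABELS = {"app": "web"}  # hypothetical label that ties the Service to the Pods

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,  # run the same application in three Pods
        "selector": {"matchLabels": APP_LABELS},
        "template": {
            "metadata": {"labels": APP_LABELS},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "example/web:1.0",  # placeholder image
                        "ports": [{"containerPort": 8080}],
                    }
                ]
            },
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": APP_LABELS,  # traffic goes to Pods carrying this label
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

for manifest in (deployment, service):
    print(json.dumps(manifest, indent=2))
```

Scheduling is not spelled out anywhere in these manifests: the cluster decides which nodes the three Pods land on.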

Kubernetes is a manager and orchestrator of applications.

Kubernetes solves the core problem of managing containerized applications at scale.

Kubernetes is just one of many platforms that support this effort; remember the principle of “technology over platforms”. Kubernetes doesn’t do anything new – rather it simply improves upon what already existed.

Kubernetes provides solutions to problems by acting as an orchestrator, manager and curator for apps.

Security

Kubernetes can present security challenges because of its complex architecture and the vulnerabilities that come with it. Proper monitoring makes vulnerabilities easier to identify, but running many containers makes this harder than expected and can give attackers more entry points into systems.

Reduce these security threats by following the steps below.

- Use security modules such as AppArmor and SELinux to harden containers.

- Enable role-based access control (RBAC). RBAC controls which resources each authenticated user can access; the roles bound to a user determine that user’s access rights.

- Keep private keys hidden by using separate containers. Front-end and back-end containers can be separated, with the interaction between them regulated.
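
To make the role-based access control step more concrete, here is a minimal sketch of a Role that grants read-only access to Pods and a RoleBinding that assigns it to one user. The namespace `dev` and the user `jane` are illustrative assumptions, and `is_allowed` is a toy check written for this example, not how the API server actually evaluates requests.

```python
# A Role granting read-only access to Pods in a single namespace.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"namespace": "dev", "name": "pod-reader"},
    "rules": [
        {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
    ],
}

# A RoleBinding that gives the Role to one (hypothetical) user.
role_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"namespace": "dev", "name": "read-pods"},
    "subjects": [
        {"kind": "User", "name": "jane", "apiGroup": "rbac.authorization.k8s.io"}
    ],
    "roleRef": {
        "kind": "Role",
        "name": "pod-reader",
        "apiGroup": "rbac.authorization.k8s.io",
    },
}


def is_allowed(user: str, verb: str, resource: str) -> bool:
    """Toy check: the user must be bound to the role and a rule must permit the verb."""
    bound = any(
        s["kind"] == "User" and s["name"] == user for s in role_binding["subjects"]
    )
    if not bound or role_binding["roleRef"]["name"] != role["metadata"]["name"]:
        return False
    return any(
        resource in rule["resources"] and verb in rule["verbs"]
        for rule in role["rules"]
    )


print(is_allowed("jane", "list", "pods"))
print(is_allowed("jane", "delete", "pods"))
```

Anything not explicitly granted is denied, which is why the second call returns `False`.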

The Complexity of a Datacenter

You’ll see something like this when you run virtual machines in a data centre.

- Datacenters themselves

- Racks of servers

- Network equipment (firewalls, routers, switches, etc.)

- Cables in abundance

If you run Kubernetes on-premises, you still need the data centre, racks of servers, network equipment, and cables. What changes is that you are no longer running applications on virtualized hardware such as ESXi, scaled out across many servers.

Kubernetes lets you manage one system, which saves time compared to managing virtualized hardware. You deal with one API rather than many UIs, automation methods, and configurations on countless servers. You are also unlikely to run Kubernetes directly on bare-metal servers. More likely you will use OpenStack or a hybrid cloud model that removes the need to manage bare-metal servers directly.

You manage your environment through an API instead of clicking buttons and maintaining many automation scripts.

Networking

Traditional networking approaches do not integrate well with Kubernetes. As a result, complexity and multi-tenancy are common problem areas.

Kubernetes networking becomes very complex when a deployment spans multiple cloud infrastructures. The same happens when workloads are mixed across Kubernetes, VMs, and other architectures.

Relying on static IP addresses and ports also leads to problems. Pod IP addresses change as Pods are created and destroyed, so IP-based policies are hard to implement.

When multiple workloads share resources, multi-tenancy issues occur. When resources are misallocated, it can affect other workloads in the environment.

A Container Network Interface (CNI) plug-in can solve many of these networking challenges. It lets Kubernetes integrate with the underlying infrastructure and reach applications across different platforms.

A service mesh can also help. It is an infrastructure layer added alongside an application to handle network-based communication between services using APIs.
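
Because IP-based rules are fragile in this environment, policies are typically written against Pod labels instead. The sketch below shows a NetworkPolicy that lets Pods labelled `tier: frontend` reach Pods labelled `tier: backend` on one port, together with a toy evaluator. The labels, namespace, and port are assumptions for illustration, the evaluator is not the real enforcement logic, and enforcement in a cluster depends on a CNI plug-in that supports NetworkPolicy.

```python
network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "allow-frontend-to-backend", "namespace": "shop"},
    "spec": {
        "podSelector": {"matchLabels": {"tier": "backend"}},  # Pods being protected
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"tier": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 8080}],
            }
        ],
    },
}


def selects(selector: dict, labels: dict) -> bool:
    """True if every matchLabels pair is present on the Pod's labels."""
    return all(
        labels.get(key) == value
        for key, value in selector.get("matchLabels", {}).items()
    )


def ingress_allowed(policy: dict, source: dict, target: dict, port: int) -> bool:
    """Toy evaluation of a single policy for traffic from source Pod to target Pod."""
    spec = policy["spec"]
    if not selects(spec["podSelector"], target):
        return True  # this policy does not select the target Pod
    for rule in spec["ingress"]:
        from_ok = any(selects(peer["podSelector"], source) for peer in rule["from"])
        port_ok = any(p["port"] == port for p in rule["ports"])
        if from_ok and port_ok:
            return True
    return False


print(ingress_allowed(network_policy, {"tier": "frontend"}, {"tier": "backend"}, 8080))
print(ingress_allowed(network_policy, {"tier": "batch"}, {"tier": "backend"}, 8080))
```

The rule keeps working when Pods are rescheduled and receive new IP addresses, since it never mentions an address.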

The Complexity of the Cloud

When you move workloads from a data centre to the cloud, the main difference is that you no longer manage the hardware. You’re still responsible for everything else, including:

- Scaling applications

- Running replicas of applications

- Making applications heal themselves automatically

- Managing and interacting with applications in several ways

- Automating everything on this list

Cloud computing has made life much easier. However, the complexity of managing everything that runs on top of the hardware remains the same.

Kubernetes is meant to make this easier. Take the cloud, for example. If you run Kubernetes in the cloud with cluster autoscaling enabled, worker nodes can scale out automatically. When Pods need more capacity than the existing nodes provide, a new worker node is created and joined to the Kubernetes cluster without manual steps.

With a managed Kubernetes service, the control plane is abstracted away, which is a huge benefit. It is one more layer of complexity you don’t need to worry about.

You can still create auto-scaling virtual machines without Kubernetes. However, you must still automate and repeat processes to move your workloads to virtual machines.

Running Kubernetes both in and outside the cloud can solve the infrastructure scalability problems.

Interoperability

Sometimes, interoperability is a major Kubernetes problem. Communication between apps can be difficult when interoperable cloud-native apps are enabled on Kubernetes. It can affect the deployment of clusters, as app instances may not work correctly on specific nodes.

Kubernetes often runs more smoothly in development, staging, or quality assurance (QA) environments than in production. Migration to an enterprise production environment can create many complex issues in governance, performance, and interoperability.

To reduce interoperability issues, the following measures can be taken:

- Use the same API, command line, and UI across environments.

- Use the Open Service Broker API to make cloud-native apps interoperable and more portable.

- Draw on collaborative projects between different organizations (Google, SAP, Red Hat, IBM) that provide services for apps running on cloud-native platforms.

Scaling Servers: Complexities

It takes work to have high availability and scale across virtualized or bare metal servers.

There are two ways to scale servers:

- Hot

- Cold

“Hot” servers refer to servers that are actively running but do not currently handle workload. Such machines remain ready and waiting in case any additional workload comes their way.

“Cold” servers may have an image of the required application, binaries, and dependencies but are turned off.

You will have to manage, pay for, and automate the maintenance of these servers in both scenarios.

Kubernetes allows you to add a worker node or control plane node easily. Depending on where you deploy it, you’ll have to maintain the worker nodes (updates) and the control plane (management, maintenance, etc.). It’s still much less work than managing a server running an application.

Scaling, in general, is a complex task. It takes several repeatable steps to make a server look and behave the same as other servers. When you add a worker node or control plane node with a tool such as kubeadm, it is a simple one-line command on the server. After a few moments, you have officially scaled out.

Storage

Storage on Kubernetes can be problematic for large organizations, particularly those with on-premises server infrastructure. These organizations manage their entire storage infrastructure themselves without relying on cloud resources, which can lead to more vulnerabilities and memory problems.

To overcome these issues, the most effective solution is to migrate to a public cloud environment and reduce reliance on local servers. Other solutions include:

- Ephemeral storage: temporary, volatile storage that is attached to an instance for its lifetime and holds data such as caches, swap volumes, session data, and buffers.

- Persistent storage: storage volumes connected to stateful applications such as databases. They remain usable after the container’s life ends.

- Persistent volume claims, storage classes, and StatefulSets, which can solve other storage and scaling issues.
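
The difference between ephemeral and persistent storage shows up directly in a Pod definition. The following sketch pairs a PersistentVolumeClaim with a Pod that mounts it next to an `emptyDir` scratch volume. The claim name, storage class `standard`, size, image, and mount paths are all assumptions made for this example.

```python
import json

pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "db-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "standard",  # assumed class; depends on the cluster
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "db"},
    "spec": {
        "containers": [
            {
                "name": "db",
                "image": "example/db:1.0",  # placeholder image
                "volumeMounts": [
                    {"name": "data", "mountPath": "/var/lib/data"},
                    {"name": "scratch", "mountPath": "/tmp/cache"},
                ],
            }
        ],
        "volumes": [
            # Persistent: the data outlives the Pod because it is backed by the claim.
            {"name": "data", "persistentVolumeClaim": {"claimName": "db-data"}},
            # Ephemeral: an emptyDir is removed together with the Pod.
            {"name": "scratch", "emptyDir": {}},
        ],
    },
}

for manifest in (pvc, pod):
    print(json.dumps(manifest, indent=2))
```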

Scaling Apps: Complexities

Kubernetes creates a container-centric system.

Other environments may include components such as:

- Bare Metal

- Virtual Machines

- A mix of both

Kubernetes is a containerized environment.

Containerized environments are complex by themselves, but the great thing is that you only need to plan and create one type of environment. The same goes for scaling applications.

Scaling an application in a traditional environment requires a lot more planning. The workflows ensure an application can function properly even if the server load increases. The workflow looks like this:

- The auto-scaling group creates a new server

- The server is configured

- The server installs all application dependencies

- The application is deployed

- Testing is done to make sure that the application functions properly

These steps are complex, even at a high level. It is incredible how much automation code goes into the configuration of a server.

Kubernetes does all of this for you. If a Pod cannot get the resources it needs on one worker node, it is scheduled onto another. You can set the minimum and maximum number of replicas you want to run for a Pod. If the maximum is too low, update it and redeploy the Kubernetes manifest.
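
The idea of a Pod moving on to the next worker node can be sketched as a first-fit placement. The node names and capacities below are made up, and `schedule` is a deliberate simplification: the real Kubernetes scheduler filters and scores nodes using many more criteria.

```python
def schedule(pod_request: dict, nodes: list[dict]):
    """Return the name of the first node with enough free CPU and memory, else None."""
    for node in nodes:
        fits_cpu = node["free_cpu_m"] >= pod_request["cpu_m"]
        fits_mem = node["free_mem_mi"] >= pod_request["mem_mi"]
        if fits_cpu and fits_mem:
            # Reserve the resources so later Pods see the reduced capacity.
            node["free_cpu_m"] -= pod_request["cpu_m"]
            node["free_mem_mi"] -= pod_request["mem_mi"]
            return node["name"]
    return None  # no node fits; the Pod would stay pending


# Hypothetical cluster: CPU in millicores, memory in MiB.
nodes = [
    {"name": "worker-1", "free_cpu_m": 200, "free_mem_mi": 512},
    {"name": "worker-2", "free_cpu_m": 1000, "free_mem_mi": 2048},
]
pod = {"cpu_m": 500, "mem_mi": 256}

# worker-1 lacks CPU for this request, so the Pod is placed on worker-2.
print(schedule(pod, nodes))
```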

Kubernetes may be complex, but it is incredible how easily you can scale applications.

Scaling

A poorly equipped infrastructure can be a severe disadvantage to an organization. Diagnosing and fixing problems can be a daunting task because Kubernetes is complex and generates a large amount of data.

Automation is essential for scaling up. Outages can be damaging for any business in terms of revenue as well as user experience. Kubernetes-based services for customers also take a hit.

The dynamic nature of computing environments and software density makes the problem worse for organizations. Typical difficulties include:

- Managing multiple clouds, clusters, designated users, and policies

- Complex installation and configuration

- Different user experiences depending on the environment

- Incompatibility between the Kubernetes infrastructure and some tools, where integration errors can make expansion difficult

The autoscaling/v2beta2 API version (autoscaling/v2 in current Kubernetes releases) allows you to specify multiple metrics for the Horizontal Pod Autoscaler.
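
A Horizontal Pod Autoscaler with more than one metric might look like the sketch below. It also approximates the documented scaling rule: compute a replica count per metric as `ceil(currentReplicas * currentValue / targetValue)`, take the largest, and clamp it between the minimum and maximum. The target name `web` and the thresholds are assumptions, and `replicas_for` ignores details of the real controller such as tolerances and stabilization windows.

```python
import math

hpa = {
    "apiVersion": "autoscaling/v2",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": "web"},
    "spec": {
        "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": "web"},
        "minReplicas": 2,
        "maxReplicas": 10,
        "metrics": [
            {
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": 60},
                },
            },
            {
                "type": "Resource",
                "resource": {
                    "name": "memory",
                    "target": {"type": "Utilization", "averageUtilization": 80},
                },
            },
        ],
    },
}


def replicas_for(spec: dict, current_replicas: int, observed: dict) -> int:
    """observed maps a metric name to its current average utilization in percent."""
    proposals = []
    for metric in spec["metrics"]:
        name = metric["resource"]["name"]
        target = metric["resource"]["target"]["averageUtilization"]
        proposals.append(math.ceil(current_replicas * observed[name] / target))
    wanted = max(proposals)  # the metric that needs the most replicas wins
    return max(spec["minReplicas"], min(spec["maxReplicas"], wanted))


print(replicas_for(hpa["spec"], 4, {"cpu": 90, "memory": 40}))
```

With four replicas at 90% CPU against a 60% target, the CPU metric asks for six replicas while memory asks for two, so the result is six.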

Kubernetes can be integrated with production environments through open-source container management software, which provides seamless application management and scaling either on-premises or in the cloud. Container managers fulfill many functions related to Kubernetes:

- Joint Infrastructure Management across Clouds and Clusters

- Easy configuration and deployment with a user-friendly interface

- Easy-to-scale pods and clusters

- Project guidelines, management of workload, and RBAC

Upgrade Applications

Applications also need upgrades. On a server, the workflow looks like this:

- SSH into the server

- Copy the new binary to the server

- Stop the service

- Replace the old binary with the new one

- Start the service

- Hope that all is well

With Kubernetes, you can use rolling updates or canary deployments instead, which follow a similar idea. Imagine you have a Deployment with three replicas. A rolling update rolls out the new version to one Pod, checks that it works, and then moves on to the next. This is a much easier approach than upgrading on bare metal or virtual machines.
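
The one-Pod-at-a-time behaviour can be sketched as a small simulation. The `strategy` fragment uses the Deployment fields for rolling updates, while `rolling_update` and its `is_healthy` callback are hypothetical helpers written for this example rather than anything Kubernetes exposes.

```python
# Deployment strategy fragment: add at most one extra Pod, never drop below capacity.
strategy = {
    "type": "RollingUpdate",
    "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
}


def rolling_update(pods: list[str], new_version: str, is_healthy) -> tuple[list[str], bool]:
    """Replace Pods one at a time; stop at the first unhealthy replacement."""
    updated = list(pods)
    for index in range(len(updated)):
        previous = updated[index]
        updated[index] = new_version
        if not is_healthy(new_version, index):
            updated[index] = previous  # keep the old Pod and halt the rollout
            return updated, False
    return updated, True


# Three replicas on v1; the health check is a stand-in for a readiness probe.
replicas = ["v1", "v1", "v1"]
print(rolling_update(replicas, "v2", lambda version, index: True))
print(rolling_update(replicas, "v2", lambda version, index: index < 1))
```

In the second call the rollout stops after the first Pod, leaving two replicas on the old version still serving traffic.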

Microservices: The New Trend

Monolithic apps are tightly coupled applications. This means that if an update is needed on one part, it will affect the rest.

Microservices are independent pieces of an application. Let’s say, for example, you have a front end, middleware, and a back end. In a monolithic system, all three would share one codebase. With microservices, they are three separate parts, so if one component changes, it won’t affect the others.

Because of how Pods work, it makes sense to split an application into separate microservices. Or, at the very least, you can split the codebase into separate repositories and create a container image per codebase.

Because creating Pods in Kubernetes is simple, it has been a huge help to Kubernetes consulting companies in enabling microservices.

Kubernetes Advantages

- Kubernetes distributes traffic and balances load so that deployments are stable.

- Kubernetes allows you to mount any storage system you want, including on-premises/local, public cloud, etc.

- Kubernetes can create and deploy new containers automatically, remove existing containers as needed, and add resources to newly created containers.

- Kubernetes lets you specify how much CPU and RAM each container needs and uses that information to place containers on nodes.

- Kubernetes has self-healing features: it restarts containers that fail, replaces containers, and kills containers that do not pass user-defined health checks.

- Kubernetes can store and manage sensitive data, including passwords, OAuth tokens, and SSH keys.
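
For the last point, sensitive values are usually placed in a Secret object, whose `data` field holds base64-encoded strings. The helper below is a small sketch with a made-up secret name and value. Note that base64 is an encoding, not encryption, so access to Secrets still needs to be restricted.

```python
import base64


def make_secret(name: str, values: dict) -> dict:
    """Build a Secret manifest with each value base64-encoded, as the data field expects."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


# Hypothetical credentials, for illustration only.
secret = make_secret("db-credentials", {"password": "s3cr3t"})
print(secret["data"]["password"])
```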

The Key Takeaway

Running Kubernetes correctly is key to mastering containerization. Managing Kubernetes is a challenge, particularly around security and storage, so many organizations hire consulting firms to help them with internal and external issues.

Kubernetes may appear complex at first, but once you understand its depths, you’ll realize its complexity stems from being an entirely novel way of managing applications. Kubernetes provides powerful benefits that reduce complexity across environments like virtual private servers.